I’m writing this to organize my thoughts and have a reference for colleagues, but I’m happy to share it as well, so let’s get to it!

Audio version of the article

Recording length: 16 minutes. It’s a short talk on this topic from Google NotebookLM.

Do you know how many AI bots crawl your website?

You might think that all traffic to your site comes from people. That’s a mistake: today, a significant share of traffic is generated by robots and agents powered by artificial intelligence. These AI systems crawl the web at scale, and if you don’t count them, you’re missing an important part of the picture.

Why should you care? AI bots affect everything from server load and SEO to how your content and brand show up in search results or chatbot answers. Heads up: if you ignore AI visits, it can cost you money, web performance, and reputation.

The following overview shows how to measure and analyze AI traffic from different angles. You’ll learn where to collect the necessary data (from server logs to Google Analytics), what to focus on when evaluating it, and how to tell whether AI is helping you more than it harms you.

Hosting vs. frontend data: where to find AI activity

The first step is to obtain reliable data about when and how AI bots visit your site. There are two main paths for this: server logs and frontend (browser-side) measurement.

- Access logs – Server access logs (plus CDN logs, if you use a CDN cache) record all requests, including those from AI bots. Whether or not you have cache layers, every hit shows up in one of these logs. That means you can reliably see bot activity from logs, which is the basis for monitoring costs and security. From access logs you can easily read how many requests came from which user-agent and audit the website’s overall traffic.

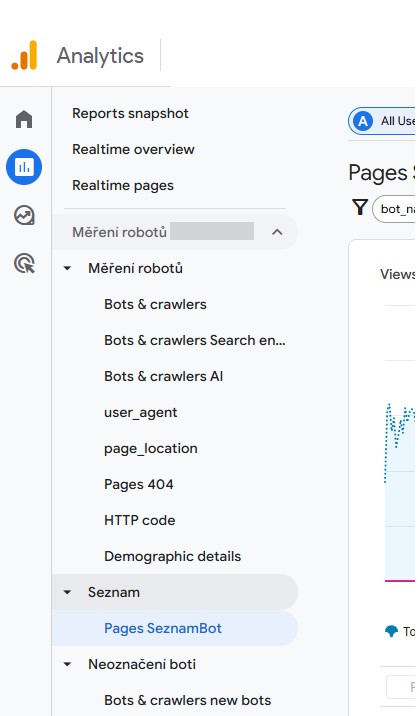

- Frontend measurement (with and without JS) – Besides the server, you can measure visits in the browser itself via JavaScript (e.g., the Google Analytics script). There’s a catch: most bots don’t execute JavaScript, so if you only track standard GA4 data, AI bots won’t appear there at all. You can distinguish humans from bots, for example, by the User-Agent string. Frontend measurement lets you separate real users from AI agents, which is crucial for counting conversions correctly and protecting against unwanted content scraping. I also have an article on this: Measuring robots in Google Analytics 4: See how search engines crawl your site. You can then use that setup for the measurements in the next section.
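If you want a quick first look at your logs without a log analytics tool, a short script is enough. The sketch below assumes the widely used “combined” log format and a small, illustrative list of AI user-agent tokens; your log format and the bots you care about may differ. It counts hits per AI bot and the AI share of requests and transferred bytes. Keep in mind that the user-agent is self-declared and can be spoofed, so these counts are claims, not proof.

```python
import re
from collections import Counter

# One line in the "combined" log format:
# ip ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Illustrative, incomplete list of AI crawler user-agent tokens; extend as needed.
AI_BOT_TOKENS = ["GPTBot", "OAI-SearchBot", "ChatGPT-User", "ClaudeBot",
                 "PerplexityBot", "CCBot", "Bytespider"]


def ai_bot_for(agent):
    """Return the first known AI token found in the user-agent, or None."""
    lowered = agent.lower()
    for token in AI_BOT_TOKENS:
        if token.lower() in lowered:
            return token
    return None


def summarize(lines):
    """Count hits per AI bot and the AI share of requests and bytes."""
    hits, ai_bytes = Counter(), 0
    total_hits = total_bytes = 0
    for line in lines:
        m = LOG_RE.match(line)
        if not m:
            continue  # skip lines in another format
        size = 0 if m["bytes"] == "-" else int(m["bytes"])
        total_hits += 1
        total_bytes += size
        bot = ai_bot_for(m["agent"])
        if bot:
            hits[bot] += 1
            ai_bytes += size
    return {
        "hits_per_bot": dict(hits),
        "ai_share_requests": sum(hits.values()) / total_hits if total_hits else 0.0,
        "ai_share_bytes": ai_bytes / total_bytes if total_bytes else 0.0,
    }


# Two made-up log lines, for illustration only.
SAMPLE = [
    '203.0.113.5 - - [10/Oct/2025:13:55:36 +0200] "GET /blog/ HTTP/1.1" 200 5000 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.2; +https://openai.com/gptbot)"',
    '198.51.100.7 - - [10/Oct/2025:13:56:01 +0200] "GET / HTTP/1.1" 200 15000 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/141.0"',
]

if __name__ == "__main__":
    # In practice: with open("access.log") as f: print(summarize(f))
    print(summarize(SAMPLE))
```

Multiplying the byte share by your monthly hosting bill gives a rough first estimate of what you spend on bots.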

Do AI crawlers go where you want them to?

Next question: do AI crawlers browse only where you welcome them, or do they go where they shouldn’t? It’s time to find out whether they respect your rules and how they move through your content.

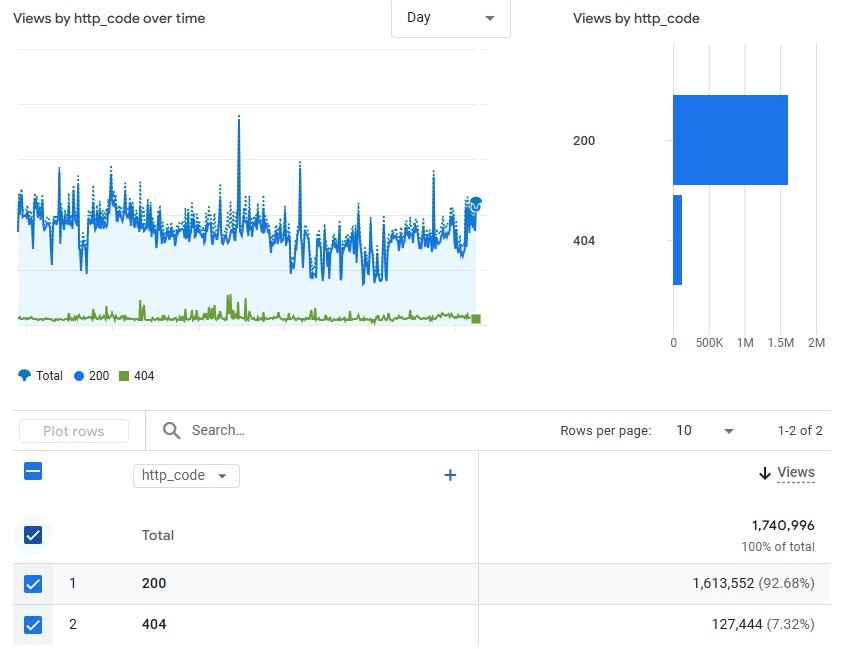

- HTTP status codes (3xx, 4xx, 5xx) – Check which responses your server returns to AI bots. Do they often hit redirects (3xx)? That may indicate they’re indexing outdated URLs and wasting crawl budget. Seeing lots of 404 errors (4xx)? Then they’re trying URLs that don’t exist, perhaps due to bad links or an outdated sitemap. And what about server errors (5xx)? Those may mean the bot is overloading the site. These status codes show the efficiency of your site structure and help uncover weak spots that need fixing (or closer monitoring).

- Bad and non-canonical URLs – Watch for bots hitting odd addresses with incorrect parameters or duplicate URLs. If an AI crawler fetches several URL variants that lead to the same content (e.g., extra parameters, different letter casing, trailing slash vs. none), you’ve uncovered a canonicalization problem. It’s worth finding and fixing these weaknesses: you’ll improve SEO, reduce server load, and keep AI from feeding its models with messy data.

- Restricted pages (robots.txt, X-Robots-Tag) – Test how AI bots behave toward content you’ve marked as off-limits. Do they respect your robots.txt and X-Robots-Tag? Some of the new AI crawlers ignore your restrictions and go where they shouldn’t. That’s crucial for security: if bots ignore your rules, you’ll need other defenses (e.g., blocking requests by IP or user-agent).

- Use of sitemap – Examine whether AI crawlers use your sitemap.xml. Do bots mostly follow links, or do they diligently download the sitemap and follow it? If you see requests to sitemap.xml in access logs, that’s a good sign: it suggests the bot wants to index important content systematically. When AI properly adopts your sitemap, it’s less likely to miss key pages. That’s ideal for targeted indexing and better content availability in the AI world.

- Visit regularity (freshness) – Track how often individual AI bots return to your site. Do they stop by once a month, or do they check for new content daily? Regular visits indicate they watch your site for updates, which is great news: your new content will quickly get on AI’s radar. Test it: publish something new and watch how long it takes AI to notice. That’s how you measure your site’s “freshness” and adjust your publishing calendar to your advantage.
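Once log lines are parsed into (bot, path, status) records, the status-code breakdown and a robots.txt compliance check take only a few lines. This minimal sketch uses Python’s standard urllib.robotparser and assumes the bot’s user-agent token is used as the agent name; robotparser uses plain prefix matching (no `*` or `$` inside paths) and applies the first matching rule rather than Google’s longest-match rule, so treat the result as a first pass. X-Robots-Tag can’t be checked this way: it controls indexing, not fetching, so the logs only show the fetch. The robots.txt and records below are hypothetical.

```python
from collections import Counter, defaultdict
from urllib.robotparser import RobotFileParser


def status_classes(records):
    """records: (bot, path, status) tuples, e.g. parsed from access logs."""
    by_bot = defaultdict(Counter)
    for bot, _path, status in records:
        by_bot[bot][f"{status // 100}xx"] += 1  # 301 -> "3xx", 404 -> "4xx"
    return {bot: dict(counts) for bot, counts in by_bot.items()}


def robots_violations(records, robots_txt):
    """Return (bot, path) pairs fetched although robots.txt disallows them
    for that bot's user-agent token."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [(bot, path) for bot, path, _status in records
            if not parser.can_fetch(bot, path)]


# Hypothetical robots.txt and log records, for illustration only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /private/

User-agent: *
Disallow: /admin/
"""

RECORDS = [
    ("GPTBot", "/blog/post", 200),
    ("GPTBot", "/private/report", 200),
    ("GPTBot", "/old-page", 301),
    ("ClaudeBot", "/admin/login", 403),
    ("ClaudeBot", "/missing", 404),
]

if __name__ == "__main__":
    print(status_classes(RECORDS))
    print(robots_violations(RECORDS, ROBOTS_TXT))
```

Note that a bot with its own group in robots.txt (GPTBot here) ignores the `*` group, which is why its request to /admin/ would not count as a violation.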

AI bots and your server: performance, costs, security

Not all bots are the same. Sometimes AI bots are welcome helpers (indexing your content for search engines); other times they’re uninvited resource hogs. From the server perspective, it’s important to know how many resources AI bots consume and whether that impacts services for real visitors.

- Is everyone indexing you? – Are you sure all relevant AI bots can actually index you? You might be surprised that your own hosting can block some bots before they reach the site. Heads up: some hosting providers or firewalls automatically filter “unknown” robots (by User-Agent string or IP range) to save compute resources. Sometimes they don’t even realize it: a burst of activity from some new crawler spikes server load, and it gets blocked without anyone noticing. In the end, you may be invisible to AI models because they can’t reach you. It’s worth testing occasionally: try accessing your site as different AI bots (or use services that do it) and verify none are silently blocked.

- AI load in percent – Find out what share of total traffic (requests, data volume) comes from AI bots. Is it 5% or 50%? This number is eye-opening. It used to be common for a small blog to see ~70% of its server traffic from crawlers (Googlebot, SeznamBot, etc.). Generally, the larger the site, the smaller the crawlers’ share should be. A high percentage from bots signals heavy use of your resources by automated scripts. That’s essential for planning server scaling and budgeting: you may need better infrastructure or, conversely, to rein in bots a bit. I’ve even seen AdSense verification bots load infrastructure so much that it cost thousands of euros weekly. It was a bug on Google’s side, but it still cost us real money.

- Costs of bots – Calculate the financial impact of bots. How much does serving their requests cost you? You can estimate it from transferred gigabytes and CPU/RAM load. If you know what percentage of traffic comes from bots and how much you pay for hosting, you’ll quickly get the approximate amount you “spend on bots” each month. You may find AI bots cost you more than human visitors without bringing a single conversion. That’s a basis for deciding whether to block some bots or at least slow them down; I generally recommend throttling. Also consider that you’re effectively paying for PR in LLMs, and by blocking, you might sacrifice your future there because your site loses reputation.

- Load spikes during AI activity – Monitor when the highest server load occurs and whether it correlates with AI crawler surges (e.g., summer 2025 and OpenAI 🙂 rolling out a big GPT-5 update). You may discover regular spikes when your site slows down or exhausts PHP threads, and the culprit isn’t a customer rush but a hungry bot. Detecting these peaks lets you prevent outages and keep performance stable even during bot raids.

- Crawl speed and balance among bots – Not all bots behave the same. Explore how fast and aggressively individual AI crawlers traverse your site. Also remember some bots just download data and bring no traffic at all. In my case, ChatGPT often accounts for 95%* of AI-driven visits. (*Note: AI Overviews and AI mode can’t be separated from Google organic.)

- Bot verification and spoofing – Don’t trust everything that claims to be Googlebot or ChatGPT. Malicious actors sometimes masquerade as well-known crawlers to bypass security. Verify whether a bot is who it says it is, for example by checking IP ranges (Googlebot crawls from published ranges) or by a reverse DNS lookup confirmed with a forward lookup. Detecting spoofing (impersonation of a legitimate bot) protects you from unauthorized snooping and data theft. It’s more advanced, but crucial for web security.
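The DNS-based check can be scripted along the lines Google documents for Googlebot: reverse-resolve the requesting IP, check that the hostname belongs to an expected domain, then confirm that a forward lookup of that hostname returns the same IP. This sketch uses only Python’s socket module. The suffix tuple is an assumption to check against Google’s current documentation, and some AI vendors publish IP range lists instead, in which case you would compare against those ranges. The resolver functions are parameters so the logic can be tested without network access.

```python
import socket

# Hostname suffixes Google documents for Googlebot; verify against the
# current documentation and look up the equivalent for other crawlers.
GOOGLEBOT_SUFFIXES = (".googlebot.com", ".google.com")


def verify_by_dns(ip, allowed_suffixes,
                  reverse=socket.gethostbyaddr,
                  forward=socket.gethostbyname_ex):
    """Reverse-resolve the IP, check the hostname's domain, then confirm
    that a forward lookup of that hostname returns the same IP."""
    try:
        host, _aliases, _addrs = reverse(ip)
    except OSError:  # socket.herror and socket.gaierror subclass OSError
        return False
    if not host.lower().endswith(allowed_suffixes):
        return False  # e.g. "googlebot.com.evil.example" fails here
    try:
        _name, _aliases, forward_ips = forward(host)
    except OSError:
        return False
    return ip in forward_ips  # a forged PTR record fails this step


if __name__ == "__main__":
    # Needs network access; use an IP that claimed to be Googlebot in your logs.
    print(verify_by_dns("66.249.66.1", GOOGLEBOT_SUFFIXES))
```

In practice you would cache results per IP, since DNS lookups for every log line are slow.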

What do AIs say about you? (Mentions of your brand in LLMs)

Another measurement dimension comes with large language models (LLMs) like GPT-5, Claude, or Gemini. These models may mention your site or brand in their answers, and you should know what and how they say it. It’s basically reputation monitoring in the AI era.

- Mentions by model – Start by exploring mentions of your site/brand across different AI systems. Each model (and version) can have a different “opinion” and information. Compare, for example, ChatGPT vs. Claude vs. Gemini vs. Grok: does one mention your product more often than another? Is one more accurate, or does it hallucinate? These differences reveal where you have the strongest “AI visibility” and where to focus (e.g., optimize content for a specific platform).

- Brand mentions and frequency – Record how often your brand or site appears in AI answers overall. If your brand regularly appears as an example or recommendation for a general industry question, that signals decent trustworthiness in AI’s eyes (and thus in the eyes of users reading those answers). The higher the visibility, the better for your brand, though context still matters.

- Sentiment of mentions – It’s not just that AI mentions you, but how. Are mentions positive (“We recommend site X as a great resource”), neutral, or negative? Evaluate the sentiment of each mention. If AI spreads mostly negative impressions about your company (e.g., “Company X had a data leak in the past…”), it can subtly damage your reputation. For negative sentiment, find out why and work on remediation (factually or via better PR).

- Competitors and their image – Likewise, examine how your competitors appear in AI answers. Are they mentioned more often? In a better light? Comparing competitor sentiment and frequency shows how you fare in the industry’s “AI conversation.” It may turn out competitor sites are preferred by AI models as sources, and you’ll want to learn why. This analysis is valuable for content strategy and marketing: reveal where competitors lead and try to catch up with quality content. In principle, the ratio between you and competitors matters more than raw counts.

- Links to your site – Track whether AI-generated answers include direct links to your site. For example, Google AI Overviews and AI mode sometimes list the sources they drew from (not often, and usually picked fairly randomly for the topic); if your site is among them, that’s a direct traffic channel. The more links AI offers to you, the more curious users may click. This is essentially a new type of “backlink,” except it exists only in the context of an AI answer. It’s definitely worth monitoring.

- How often do links lead to errors? – If AI links to your site, check whether those clicks end up on a 404 page. It happens: AI may cite old content that no longer exists, or it may “invent” a link imprecisely (yes, really). Such cases cost reputation and visits. Review which AIs generate links to non-existent pages and, if you can, set up 301 redirects to a working URL or restore the content. This often plagues JavaScript-heavy sites: they’re more expensive to crawl, so the AI’s copy of their content is often stale.

- Unique content in answers – Check whether AI models reproduce your content verbatim in their answers. If your site offers unique data or phrasing and AI starts quoting it (even without a link), that’s both good and bad. Good because your content was recognized as a quality source and made it into AI “knowledge.” Bad because you may lose visits, users read it in AI and never come to your site. A strategy like watermarking can help.

- Watermarking content – Consider marking your text with a subtle signature (watermark), e.g., a specific phrasing or character sequence. When this exact phrase appears in an AI answer, you have proof the model draws from your content. It helps detect potential misuse and can support removal or compensation claims if copyright is infringed. It’s advanced, but useful in fighting invisible “content lifting” by AI systems.

- Facts vs. hallucinations about your company – Finally, track the quality of information AI spreads about you. Sometimes models mention your site but claim nonsense (hallucinate). For example, they attribute to you a product you don’t sell or an event that never happened. Such falsehoods can harm your brand if users believe them. Identify these inaccuracies and consider responses: sometimes a clarification on your site is enough; other times you can contact the model’s creators (for major discrepancies). At minimum, you’ll know what to watch. From practice: I’ve seen Google AI mode merge reviews of five different companies for a single query. Because Google likes to show both sides of a topic, positive and negative, a reputational problem emerged: a company in New York had mistreated its employees, and Gemini failed to recognize that it was a different company.
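If you collect AI answers yourself (by hand or with your own script), counting mentions per model, your share of voice against competitors, and verbatim watermark hits is straightforward. In this sketch the answers are already in a list; the brand names, sample answers, and marker phrase are made up for illustration. It counts answers that mention a brand (not repeated mentions within one answer). Sentiment and fact-checking are deliberately left out: judging them reliably still needs a human or a separate classifier.

```python
import re
from collections import Counter, defaultdict


def mention_stats(answers, brands):
    """answers: (model, answer_text) pairs you collected yourself.
    Counts answers mentioning each brand (not repeated mentions)."""
    patterns = {b: re.compile(r"\b" + re.escape(b) + r"\b", re.IGNORECASE)
                for b in brands}
    per_model = defaultdict(Counter)
    for model, text in answers:
        for brand, pattern in patterns.items():
            if pattern.search(text):
                per_model[model][brand] += 1
    return {model: dict(c) for model, c in per_model.items()}


def share_of_voice(stats, brand):
    """Your mentions divided by all tracked brand mentions, across models."""
    total = sum(sum(c.values()) for c in stats.values())
    ours = sum(c.get(brand, 0) for c in stats.values())
    return ours / total if total else 0.0


def watermark_hits(answers, marker):
    """Models whose answers reproduce the marker phrase verbatim."""
    return sorted({model for model, text in answers if marker in text})


# Hypothetical answers and brand names, for illustration only.
ANSWERS = [
    ("ChatGPT", "Good resources include Acme Blog and Rival Guide."),
    ("Claude", "Rival Guide covers this topic well."),
    ("Gemini", "Acme Blog notes that bots are the quiet half of analytics."),
]

if __name__ == "__main__":
    stats = mention_stats(ANSWERS, ["Acme Blog", "Rival Guide"])
    print(stats)
    print(share_of_voice(stats, "Acme Blog"))
    print(watermark_hits(ANSWERS, "quiet half of analytics"))
```

The ratio from share_of_voice is the “you vs. competitors” number that matters more than raw counts.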

AI traffic in Google Analytics 4: what can it tell you?

When AI tools (ChatGPT, Perplexity, Gemini, Bing Chat, etc.) generate answers, in some cases they include links to websites, and users may click them. Such AI-driven visits then show up in your stats, but you need to recognize them. Fortunately, Google Analytics 4 (GA4) can do a lot if you configure it properly.

- Custom channel for AI traffic – I recommend creating a dedicated Custom Channel Group in GA4 to classify all visits coming from AI tools in one place. It even works retroactively 😉. Name the channel something like “Artificial intelligence” (“AI” alone isn’t enough because the letters AI also appear in pAId or mAIl); referrals like chat.openai.com or chatgpt.com fall into it, as do visits with UTM parameters you define. A custom channel group gives you a quick overview of what percentage of total traffic (often around 0.2%) already comes from AI, neatly in one place. (That’s better than having these visits scattered across Social, Direct, etc.)

- Custom report for AI visits – Next, create your own report in the GA4 Reports menu where you break down visits from individual AI sources in more detail. Include dimensions like source/medium, landing page, etc. The result will be a table or chart showing how visitors from ChatGPT differ from those from Bing Chat or Google Gemini (the Gemini app, not the search results page). You’ll see metrics like sessions, bounce rate, time on page, etc., all split by AI source. (You’ll find a detailed how-to in a separate article.)

- Landing pages from AI – Definitely track which landing pages AI-referred visitors arrive on. You may be surprised which articles or products AI recommends most often. These pages become your “entry points” for new audiences arriving via AI. Make sure they deliver a great first impression and a clear call-to-action: the user who came from an AI recommendation is curious and willing to read, so seize that chance. Always consider whether that page is the right one, or whether you’d rather send them elsewhere.

- Broken links from AI – Check whether visits from AI often end on error pages (4xx). If many people land on URLs that no longer exist, that’s a signal to act. AI may have surfaced content you deleted or moved long ago. Set redirects (301) to current relevant pages so users don’t end in a dead end. You’ll save their experience and keep hard-won traffic.

- Comparing AI sources with each other – Back to the custom report: compare the performance of individual AI tools. This reveals, for example:

  - Which model/tool leads – Split data by LLM brand and version. You may see OpenAI GPT-5 sending 95% of visits from AI tools. Focus on ratios among tools and their trends over time.

  - Total visit volume – Compare absolute visit counts from AI sources and your real gains. You’ll often see there’s no need to stress too much.

  - Revenue / conversions – Track what revenue (or conversions) AI visits bring. There are sites where revenue didn’t drop despite a Google organic decline due to Google AI Overviews, but I’ve also seen drops.

  - Conversion rate – Calculate how conversion rate differs for AI-referred users vs. standard channels. Often volumes are so small that this metric is still irrelevant.

  - Average conversion value.

- Google AI Overviews and AI mode – Track whether and when your site appears in these AI boxes. GA4 won’t tell you directly, but you can find out manually or via SEO tools. If a Google AI answer cites your text and links to it with a scroll to a specific paragraph (a URL text fragment that highlights the passage), that’s basically a win: you’re right in front of the user. In your reports, such pages may show an organic traffic rise without an increase in impressions in the classic SERP. (You’ll find a deeper analysis in another article.)

- Detecting AI agents in analytics – You might hope to detect AI agents directly in GA4, but in reality the agent mode of ChatGPT’s Atlas browser, Dia, or Comet can’t be detected: they usually identify as a regular Chrome browser.
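For the custom channel group, a regex condition on the source does the job. The domain list below is an assumption (tools change their domains, and you will want to add others); the leading and trailing `.*` make the pattern behave the same whether your condition does a full or a partial match. The helper then compares AI sources from a hypothetical GA4 export with (source, sessions, conversions, revenue) rows: the AI share of all sessions, each tool’s share of AI sessions, conversion rate, and average conversion value.

```python
import re

# Hypothetical, incomplete list of AI referrer domains; adjust for your data.
# The leading/trailing .* makes the pattern work for full and partial matching.
AI_SOURCE_REGEX = (r".*(chatgpt\.com|chat\.openai\.com|perplexity\.ai"
                   r"|gemini\.google\.com|copilot\.microsoft\.com|claude\.ai).*")
AI_SOURCE_RE = re.compile(AI_SOURCE_REGEX)


def is_ai_source(source):
    return AI_SOURCE_RE.fullmatch(source.lower()) is not None


def compare_ai_sources(rows):
    """rows: (source, sessions, conversions, revenue) from a GA4 export.
    Returns the AI share of all sessions and per-source metrics."""
    total_sessions = sum(r[1] for r in rows)
    ai_rows = [r for r in rows if is_ai_source(r[0])]
    ai_sessions = sum(r[1] for r in ai_rows)
    per_source = {
        source: {
            "share_of_ai": sessions / ai_sessions if ai_sessions else 0.0,
            "conversion_rate": conversions / sessions if sessions else 0.0,
            "avg_conversion_value": revenue / conversions if conversions else 0.0,
        }
        for source, sessions, conversions, revenue in ai_rows
    }
    ai_share = ai_sessions / total_sessions if total_sessions else 0.0
    return ai_share, per_source


# Made-up export rows, for illustration only.
ROWS = [
    ("google", 9000, 180, 18000.0),
    ("(direct)", 980, 20, 2000.0),
    ("chatgpt.com", 19, 1, 120.0),
    ("perplexity.ai", 1, 0, 0.0),
]

if __name__ == "__main__":
    ai_share, per_source = compare_ai_sources(ROWS)
    print(f"AI share of sessions: {ai_share:.1%}")
    for source, metrics in per_source.items():
        print(source, metrics)
```

With volumes this small, a single conversion swings the conversion rate wildly, which is why the ratios and trends matter more than any one month’s value.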

How to measure your visibility in AI search (external tools)

You don’t have to do it alone. Just as classic SEO has its tools, monitoring “AI SEO” can be made easier with specialized services. They help you track how you rank in AI-generated answers and how much traffic (not) comes from it.

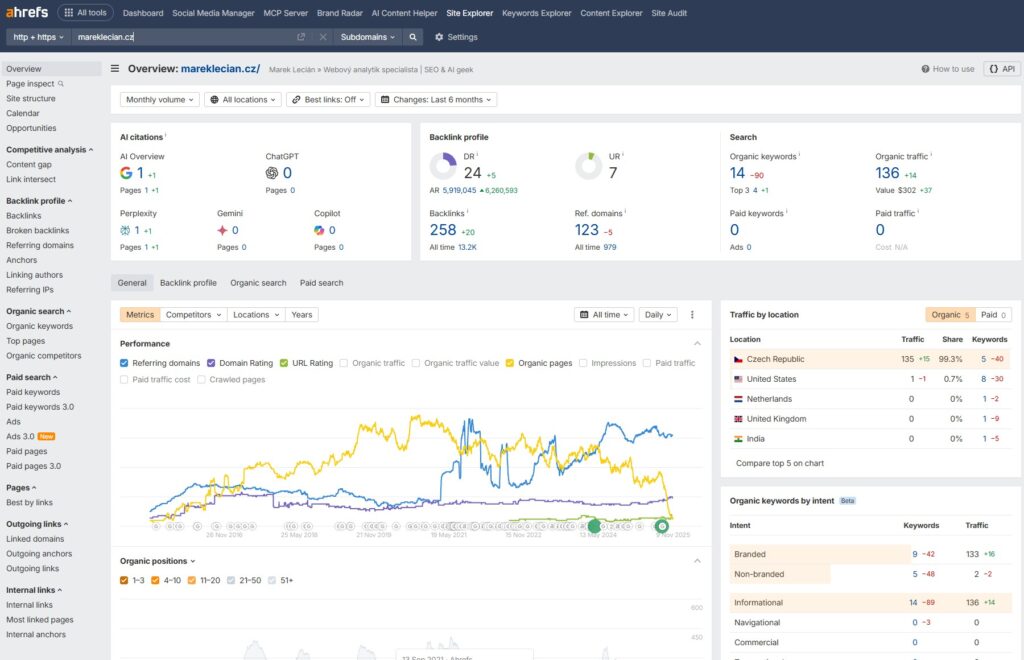

- Special SEO tools for AI – Some platforms already report visibility in AI search results. These include Marketing Miner, Semrush, Ahrefs, and SimilarWeb. These tools can detect whether an AI Overview (i.e., AI-generated block at the top) appears for a query and whether it mentions or links to your site. The big advantage is they handle this at scale, continuously, so you don’t have to code. (Of course, you can build your own solution, say a script that asks LLMs questions and analyzes answers, but that’s more demanding and suits connoisseurs who want full control over their data.)

- How many results already include AI? – Track a metric offered, for example, by Marketing Miner: what percentage of searches show AI Overviews. If you find that in your field, say, 40% of queries return an AI answer at the top, that’s a major shift in user habits. People click classic results less. This helps estimate how much AI is “biting off” from organic traffic and whether it’s time to adapt SEO strategy (or invest more in other channels).

An example of how Ahrefs often doesn’t handle Czech well: even though I get AI traffic and plenty of mentions, its measurements rely on synthetic queries, so I still see a lot of zeros in AI mentions.

- Brand vs. competitors in AI answers – Have reports show how often your brand appears in AI Overviews compared to competitors. For example, for “best phone 2025,” does AI name three brands including yours or not? If not, that’s a call to action: why doesn’t AI know us as a leader? Analyzing queries where competitors appear and you don’t reveals topics you should cover with content. And when you’re both there, check sentiment and wording: small differences (“XY has excellent reviews, while YZ struggles with battery issues”) can matter a lot to readers of AI answers.

- Potential for new content – A practical workflow follows from the above: find queries where the AI answer doesn’t mention your site but should (because you have something valuable). Those are the gaps to fill with new content. If AI often recommends someone else’s article on a topic you know well, that’s an opportunity to write your own, better, more current. That raises the odds AI mentions you next time.

- Non-localized sources – AI answering in Czech may cite English or other foreign sources simply because it didn’t find an answer in Czech. That’s your chance to shine. When you spot such a query, create Czech content covering the topic. Localization is now doubly important: not only for classic SEO but also for AI, which can then offer users a local, relevant answer (with a link to you).

- Links to your site in AI results – We touched on this in GA4, but it deserves its own monitoring. When AI generates an answer, it sometimes provides link(s) to sources. Track, via tools or manually, which questions earn you such a link. It’s a great quality signal: your content is good enough for AI to offer as a reference. These “AI backlinks” directly influence traffic, maybe not at scale, but very precisely (clickers know what they’re after). You could say this will be a new success metric in SEO: how often AI recommended me as a source.
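If your tool (or your own script) lets you export which URLs an AI answer cited for each query, both content gaps and non-localized answers can be flagged automatically. This sketch assumes a simple {query: [cited URLs]} structure and uses the .cz TLD as a rough proxy for local content, which will miss Czech sites on .com and similar domains; all queries and domains are hypothetical.

```python
from urllib.parse import urlparse


def domain(url):
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def content_gaps(citations, own_domain, competitors):
    """Queries where an AI answer cites a competitor but not you.
    citations: {query: [cited URLs]} collected from AI answers."""
    rivals = set(competitors)
    gaps = []
    for query, urls in citations.items():
        cited = {domain(u) for u in urls}
        if own_domain not in cited and cited & rivals:
            gaps.append(query)
    return gaps


def non_localized(citations, local_tld=".cz"):
    """Queries whose cited sources are all outside the local TLD; a rough
    proxy for 'the AI found no local answer'."""
    return [query for query, urls in citations.items()
            if urls and not any(domain(u).endswith(local_tld) for u in urls)]


# Hypothetical queries and domains, for illustration only.
CITATIONS = {
    "how to measure ai bots": ["https://www.rival.cz/ai-bots", "https://example.com/guide"],
    "ga4 ai channel group": ["https://www.example.org/ga4-ai"],
    "crawl budget": ["https://www.mysite.cz/crawl-budget", "https://rival.cz/crawl"],
}

if __name__ == "__main__":
    print(content_gaps(CITATIONS, "mysite.cz", ["rival.cz"]))
    print(non_localized(CITATIONS))
```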

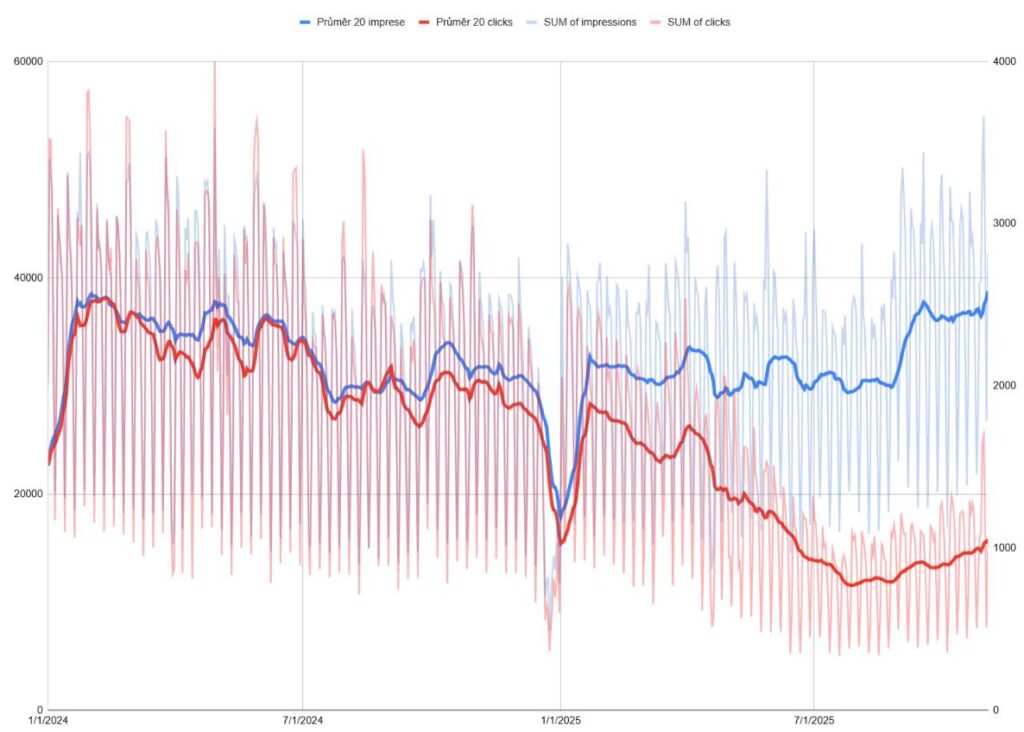

- Google Search Console and AI – Official tools lag here by design. Google Search Console won’t tell you how many impressions or clicks came from AI Overviews or AI mode. Still, you can infer a lot. Watch CTR trends for queries where an AI box appeared. A typical crocodile effect occurs: the impressions curve stays flat or rises while clicks drop, so the “jaws” open. That signals users see your link but click less, perhaps because the AI answer suffices. To catch trends, regularly export GSC data (e.g., to BigQuery), because GSC keeps detailed data for only 16 months. Note: don’t blame every CTR drop on AI; algorithm changes or seasonality can also be at play. You need context and multiple data sources.

The Google AI Overviews crocodile is often visible only in the BigQuery export data, once you filter by content type and apply averages to smooth the curve.

- Segmentation by country – If you operate internationally, track AI impacts separately by market. In the U.S., Google AI mode rolled out earlier than in the Czech Republic (October 7, 2025). In GSC, filter performance by country to see where AI already significantly affects your traffic and where it doesn’t yet. That lets you better plan content localization and marketing activities.

- Segmentation by content – Likewise, split metrics by your site’s content types. You may find AI reduces organic traffic for encyclopedic articles but sends new users to product pages (e.g., when AI recommends a specific product). Knowing which part of the site AI impacts most lets you adapt: strengthen sections, adjust content or calls-to-action for AI visitors, etc.
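To make the crocodile visible in exported data, you can smooth both curves and compare the start and end of the period. This sketch works on weekly impression and click totals (for example, aggregated from a BigQuery export for one content type); the 4-week window and the 15% click-drop threshold are arbitrary assumptions to tune, and the sample numbers are made up. A positive flag still doesn’t prove AI is the cause, since seasonality or algorithm updates can produce the same shape.

```python
def rolling_mean(values, window):
    """Simple moving average; returns len(values) - window + 1 points."""
    return [sum(values[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(values))]


def jaws_open(impressions, clicks, window=4, drop=0.15):
    """True when smoothed impressions are flat or rising while smoothed
    clicks fell by at least `drop` between the first and last window."""
    if len(impressions) != len(clicks) or len(clicks) < window:
        raise ValueError("need equally long series of at least `window` points")
    imp = rolling_mean(impressions, window)
    clk = rolling_mean(clicks, window)
    if imp[0] == 0 or clk[0] == 0:
        return False  # nothing to compare against
    imp_change = (imp[-1] - imp[0]) / imp[0]
    clk_change = (clk[-1] - clk[0]) / clk[0]
    return imp_change >= 0 and clk_change <= -drop


# Hypothetical weekly totals for one content type.
IMPRESSIONS = [1000, 1010, 990, 1000, 1020, 1030, 1040, 1050]
CLICKS = [50, 51, 49, 50, 45, 42, 40, 38]

if __name__ == "__main__":
    ctr = [c / i for c, i in zip(CLICKS, IMPRESSIONS)]
    print([f"{x:.1%}" for x in ctr])
    print(jaws_open(IMPRESSIONS, CLICKS))
```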

Test your site’s passability for AI agents

It’s worth looking at your site through AI’s eyes in terms of the user journey, not just what AI indexes, but how a real AI agent (say, an automated assistant) would handle navigation on your site.

Imagine an AI assistant tasked with finding an answer on your site or performing an action (e.g., ordering something). Simulate it: walk through your main conversion paths (funnels) and assess whether they’re machine-accessible. Typically, AI agents won’t click pretty banners and complex menus; they need clear link structures and understandable headings and text. You may learn the bot doesn’t get past the homepage and prefers indexing only your blog (evergreen content). That’s a useful nudge: are you missing something?

Assessing site accessibility for AI means verifying that key content is available without JavaScript, in clean HTML. Many AI crawlers do only basic rendering, or none at all, and don’t run all your scripts. If important content doesn’t load without JS (e.g., product descriptions loaded dynamically), AI won’t see it, and your site may look half-empty to the model. Improve your technical accessibility. Simple? Not at all. But it pays off.
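A quick way to see roughly what a non-rendering crawler gets is to fetch the raw HTML and check whether your key phrases appear in the text outside scripts and styles. This sketch uses only Python’s standard library; the user-agent string and sample page are hypothetical, and it doesn’t emulate any particular AI crawler.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def visible_text(html):
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.chunks).split())  # normalize whitespace


def missing_without_js(html, phrases):
    """Key phrases that are absent from the raw (unrendered) HTML text."""
    text = visible_text(html).lower()
    return [p for p in phrases if p.lower() not in text]


def fetch_raw_html(url, user_agent="Mozilla/5.0 (compatible; no-js-check)"):
    req = Request(url, headers={"User-Agent": user_agent})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


# Hypothetical product page whose description is injected by JavaScript.
SAMPLE_HTML = (
    '<html><body><h1>Trail Shoe X</h1><div id="desc"></div>'
    '<script>document.getElementById("desc").textContent = '
    '"Waterproof membrane";</script></body></html>'
)

if __name__ == "__main__":
    print(missing_without_js(SAMPLE_HTML, ["Trail Shoe X", "Waterproof membrane"]))
```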

From practice: I do this via Perplexity Comet by assigning tasks to an AI agent, or via the Gemini CLI combined with the Playwright MCP server.

Top dashboards and trends for management

Not every manager wants to dig through log details or GA reports. You still need a helicopter view of AI activity. Here are a few summary metrics and routines to track and report regularly:

- Crawl-to-Referral Ratio – Compare how much AI bots download with how many real visits AI sources send. For example: per 1,000 pages AI crawls in a week, you get 50 visits from AI recommendations, a 20:1 ratio. If AI takes a lot (crawl) but returns little (referrals), something’s off: either your content isn’t “good enough” for AI to recommend, or users are satisfied with AI’s answer. In any case, know it and track the trend. Don’t look only at AI crawlers: on some sites, the Seznam crawler has a worse ratio than Perplexity or OpenAI.

- Regular reviews and the impact of new models – Set up, say, a monthly review of all the above metrics. AI evolves quickly. Each new model (GPT-5, new Gemini versions, etc.) can bring step changes in behavior. Track what changed before and after each new AI release: did crawl counts jump, did referral traffic drop? These changes help you anticipate trends and react in time.

- Historical trend comparison – Compare longer periods: what did AI traffic look like a year ago vs. today? What about before and after Google AI Overviews launched in your region (May 17, 2025)? Historical data (if you started collecting in time) reveal trends you’d otherwise miss. A slow monthly increase in the bots’ share may not look dramatic, until you compare year-over-year and find it doubled.

- Cannibalization of SEO and PPC – Big topic: does AI eat into organic and paid channels? Measure how much CTR dropped in SEO and PPC on queries with AI answers. Recent studies show declines across the board, in both organic and PPC.

- Differences by industry and region – AI’s effect varies by vertical and country, so track metrics by segment. E-commerce may see less impact (people may still prefer to click into shops), while content sites may suffer more (AI summarizes and the visit disappears). The U.S. may see a stronger organic drop than the Czech Republic because AI rolled out there earlier. Consider these differences, or you risk drawing wrong conclusions. And watch changes over time: last year’s truth may not hold this year. Also be wary of foreign studies: Czech verticals were hit differently than those abroad.

- What to (not) share with AI – A strategic thought: decide which content you want AI bots to access and which you don’t. If something is truly exclusive, you may not want AI to index it and present it without users visiting your site. You can technically prevent that (e.g., with robots.txt blocks). Feel free to open up standard content: it helps you get into models and build awareness. It’s a balance between visibility and control over content.

- Crawled vs. driven – Related: ideally, content that AI crawls and indexes should also, at least sometimes, be recommended back to users. Sometimes, though, you’ll have to sacrifice content to AI in exchange for a strong brand and reputation in LLMs.

- Share of AI vs. classic traffic – Keep tabs on absolute numbers and ratios: how many bot hits vs. human visits? Is AI traffic still negligible, or already at double-digit percentages? People often imagine 20–40%; in reality it’s then 0.2–0.3% of visits. So it’s often more important to watch the Google organic drop caused by AI in SERPs than the extra traffic AI brings.
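The crawl-to-referral ratio is simply crawled pages divided by referred visits, per source. In the sketch below, OpenAI’s 1,000 pages to 50 visits is the example from the text, while the other figures are made up for illustration; in practice, crawl counts would come from your logs and referrals from GA4.

```python
def crawl_to_referral(crawled_pages, referred_visits):
    """Pages crawled per referred visit, per source (higher = takes more,
    returns less). A source that sends no visits gets infinity."""
    return {
        source: pages / referred_visits[source] if referred_visits.get(source) else float("inf")
        for source, pages in crawled_pages.items()
    }


def worst_first(ratios):
    return sorted(ratios.items(), key=lambda item: item[1], reverse=True)


# Weekly numbers; OpenAI's 1,000:50 is the example from the text,
# the other figures are made up for illustration.
CRAWLED = {"OpenAI": 1000, "Perplexity": 300, "Seznam": 5000}
REFERRED = {"OpenAI": 50, "Perplexity": 10, "Seznam": 40}

if __name__ == "__main__":
    for source, ratio in worst_first(crawl_to_referral(CRAWLED, REFERRED)):
        print(f"{source}: {ratio:.0f}:1")
```

Tracking these ratios week by week shows the trend, which tells you more than a single snapshot.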

Conclusion

Artificial intelligence is changing the rules of the game, and website owners shouldn’t be left behind. We’ve covered a lot, from hard data in server logs, through subtle sentiment nuances in AI answers, to strategies for handling it. Overall, those who measure, know. With data, you can learn whether AI is taking the wind out of your sails or bringing new audiences (and maybe customers).

Either way, it’s important to start collecting and evaluating data about AI on your site right now; your future self (and your site) will thank you. Things are moving fast, and the earlier you gain visibility, the better you can adapt.

How do you see it? Do you already track visits and AI impact, or do you still consider it unnecessary? The discussion is open: share your experience!